The annoying part of the previous model was that training alone took an hour, and waiting that long for results is no fun. In this part, we will combine two techniques to make the network train faster.

The intuitive idea is to start training with a higher learning rate and to keep decreasing it as the number of epochs grows. This makes sense: at the beginning we are far from the global optimum and want to take large steps toward it, but the closer we get, the more carefully we should move so that we do not overshoot. It is like taking the train home: when you reach your house, you walk in through the door; you do not drive the train in.

"On the importance of initialization and momentum in deep learning" is the title of a talk and a paper by Ilya Sutskever et al. There we learn about another useful trick to boost deep learning: increasing the optimization method's momentum parameter during training.
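To make the role of the momentum parameter concrete, here is a minimal sketch of gradient descent with classical momentum on a toy one-dimensional problem. The function f(x) = x**2, the helper name `descend`, and the step count are assumptions chosen purely for illustration; they come neither from the talk or paper nor from our network.

```python
def descend(learning_rate, momentum, steps=100, x=5.0):
    """Minimise f(x) = x**2 with classical momentum; return the final x."""
    velocity = 0.0
    for _ in range(steps):
        gradient = 2.0 * x  # derivative of x**2
        # The velocity is a decaying sum of the past gradient steps.
        velocity = momentum * velocity - learning_rate * gradient
        x = x + velocity
    return x


plain = descend(learning_rate=0.01, momentum=0.0)
with_momentum = descend(learning_rate=0.01, momentum=0.9)
print(abs(plain), abs(with_momentum))
```

With the same small learning rate, the run with momentum 0.9 ends up much closer to the minimum at 0 than the run without momentum.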

In our previous model, we set the learning rate and the momentum to fixed values of 0.01 and 0.9. Let's change these two parameters so that the learning rate decreases linearly with the number of epochs while the momentum increases.
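As a rough sketch of what such a pair of schedules looks like, the snippet below computes linearly spaced values for both parameters. The helper `linear_schedule` and the 5-epoch run are hypothetical and only meant for illustration; the start and stop values are the ones used for net4 later in this part.

```python
def linear_schedule(start, stop, num_epochs):
    """Return num_epochs values moving linearly from start to stop."""
    if num_epochs == 1:
        return [start]
    step = (stop - start) / (num_epochs - 1)
    return [start + step * i for i in range(num_epochs)]


# Hypothetical 5-epoch run; a real run would use far more epochs.
learning_rates = linear_schedule(0.03, 0.0001, 5)
momenta = linear_schedule(0.9, 0.999, 5)

for epoch, (lr, mom) in enumerate(zip(learning_rates, momenta), start=1):
    print(f"epoch {epoch}: learning rate {lr:.5f}, momentum {mom:.5f}")
```

The learning rate shrinks by the same amount every epoch while the momentum grows by the same amount every epoch.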

NeuralNet lets us update parameters during training through the on_epoch_finished hook: we pass it a function, and that function is called after every epoch. However, before we can change the learning rate and the momentum on the fly, we must turn these two parameters into Theano shared variables. Fortunately, this is very simple.

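    import numpy as np
    import theano

    def float32(k):
        # Convert to a 32-bit float before wrapping it in a shared variable.
        # np.cast is only available in older NumPy releases.
        return np.cast['float32'](k)

    net4 = NeuralNet(
        # ...
        update_learning_rate=theano.shared(float32(0.03)),
        update_momentum=theano.shared(float32(0.9)),
        # ...
        )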

The callback we pass, or each callback in the list, is called with two arguments: nn, which is the NeuralNet instance itself, and train_history, which is the same as nn.history.
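The calling convention can be sketched without any deep learning library. In the snippet below, `FakeNet` is a hypothetical stand-in that only mimics how callbacks are invoked; it is not nolearn's NeuralNet, and `record_epoch` is an illustrative callback.

```python
class FakeNet:
    """Stand-in for a network object; NOT nolearn's NeuralNet."""

    def __init__(self, on_epoch_finished, max_epochs):
        self.on_epoch_finished = on_epoch_finished
        self.max_epochs = max_epochs
        self.history = []

    def fit(self):
        for epoch in range(1, self.max_epochs + 1):
            # A real network would train here and record real losses.
            self.history.append({'epoch': epoch})
            # Each callback receives the network and its history.
            for callback in self.on_epoch_finished:
                callback(self, self.history)


seen_epochs = []


def record_epoch(nn, train_history):
    """Example callback: remember the epoch number that just finished."""
    seen_epochs.append(train_history[-1]['epoch'])


net = FakeNet(on_epoch_finished=[record_epoch], max_epochs=3)
net.fit()
print(seen_epochs)  # [1, 2, 3]
```

Any callable that accepts these two arguments would fit this pattern, which is why a class instance with a call method can be used in place of a plain function.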

Instead of hard-coding the schedule in a function, we'll use a parameterizable class whose `__call__` method serves as our callback. Let's call this class AdjustVariable. The implementation is quite simple:

    class AdjustVariable(object):
        def __init__(self, name, start=0.03, stop=0.001):
            self.name = name
            self.start, self.stop = start, stop
            self.ls = None

        def __call__(self, nn, train_history):
            if self.ls is None:
                # Build the schedule once: one value per epoch.
                self.ls = np.linspace(self.start, self.stop, nn.max_epochs)

            epoch = train_history[-1]['epoch']
            new_value = float32(self.ls[epoch - 1])
            # Update the shared variable in place.
            getattr(nn, self.name).set_value(new_value)

Now let's put these changes together and get ready to train the network:

    import pickle

    net4 = NeuralNet(
        # ...
        update_learning_rate=theano.shared(float32(0.03)),
        update_momentum=theano.shared(float32(0.9)),
        # ...
        regression=True,
        # batch_iterator_train=FlipBatchIterator(batch_size=128),
        on_epoch_finished=[
            AdjustVariable('update_learning_rate', start=0.03, stop=0.0001),
            AdjustVariable('update_momentum', start=0.9, stop=0.999),
            ],
        max_epochs=3000,
        verbose=1,
        )

    X, y = load2d()
    net4.fit(X, y)

    # Save the trained network so it can be reloaded later.
    with open('net4.pickle', 'wb') as f:
        pickle.dump(net4, f, -1)

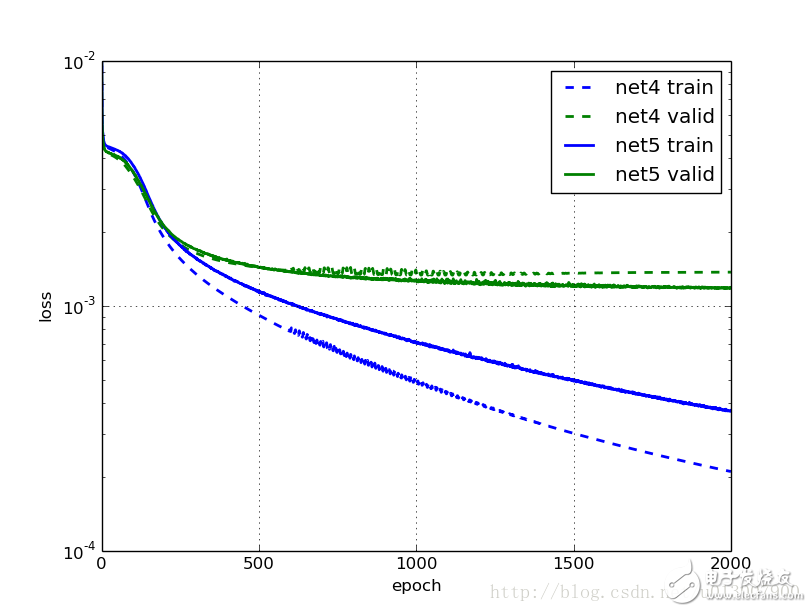

We will train two networks: net4 does not use our FlipBatchIterator, net5 does. Other than that, they are identical.

This is how net4 learns:

Epoch | Train loss | Valid loss | Train / Val
------|------------|------------|------------
50    | 0.004216   | 0.003996   | 1.055011
100   | 0.003533   | 0.003382   | 1.044791
250   | 0.001557   | 0.001781   | 0.874249
500   | 0.000915   | 0.001433   | 0.638702
750   | 0.000653   | 0.001355   | 0.481806
1000  | 0.000496   | 0.001387   | 0.357917

Cool, training is much faster now! The training error at epochs 500 and 1000 is half of what it was in net2, before we adjusted the learning rate and the momentum. This time, generalization seems to stop improving after about 750 epochs, so there is little point in training much longer.
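A quick check on the rows of the net4 table supports this reading. The snippet only uses the sampled epochs listed in the table, so the true minimum of the validation loss may lie between them; the variable names are illustrative.

```python
# (epoch, train loss, valid loss) rows copied from the net4 table.
net4_rows = [
    (50, 0.004216, 0.003996),
    (100, 0.003533, 0.003382),
    (250, 0.001557, 0.001781),
    (500, 0.000915, 0.001433),
    (750, 0.000653, 0.001355),
    (1000, 0.000496, 0.001387),
]

# Pick the row with the lowest validation loss.
best_epoch, _, best_valid = min(net4_rows, key=lambda row: row[2])
print(best_epoch, best_valid)  # 750 0.001355
```

The training loss keeps falling through epoch 1000, while the validation loss is already slightly higher at epoch 1000 than at epoch 750.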

What happens once net5 adds data augmentation?

Epoch | Train loss | Valid loss | Train / Val
------|------------|------------|------------
50    | 0.004317   | 0.004081   | 1.057609
100   | 0.003756   | 0.003535   | 1.062619
250   | 0.001765   | 0.001845   | 0.956560
500   | 0.001135   | 0.001437   | 0.790225
750   | 0.000878   | 0.001313   | 0.668903
1000  | 0.000705   | 0.001260   | 0.559591
1500  | 0.000492   | 0.001199   | 0.410526
2000  | 0.000373   | 0.001184   | 0.315353

Again, training is faster than with net3, and the results are better. After 1000 epochs, net5 is already better than net3 was after 3000 epochs. In addition, the model trained with data augmentation is now about 10% better than the one trained without it.
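As a rough cross-check of the comparison between net4 and net5, the snippet below compares the lowest validation losses that appear in the two tables. It assumes that these sampled rows are representative; the full training logs may give a somewhat different figure.

```python
# Lowest validation losses among the rows listed in the two tables.
net4_best_valid = 0.001355  # net4, epoch 750
net5_best_valid = 0.001184  # net5, epoch 2000

# Fraction by which net5's validation loss is lower than net4's.
relative_improvement = (net4_best_valid - net5_best_valid) / net4_best_valid
print(f"{relative_improvement:.1%}")
```

On these rows the gap comes out a little above 12%, which is in line with the rough figure quoted above.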

Dropout
